Polyglots & Working with Foreign Languages

This section collects several demos of working with foreign languages. Polyglots in compose.mk are roughly comparable to justfile shebang recipes [6], but perhaps more similar in spirit to Graal polyglots [7], with less bootstrap and baggage.

Because compose.mk also adds native support for docker, including inlined Dockerfiles, you can use polyglots with arbitrary containerized interpreters, with or without customizing stock images. There's also some support for communication between polyglots. For details see the section on pipes and/or the stages documentation. For topics related to yielding control to polyglots, see the signals and supervisors docs.

Polyglots come in a few basic types and this page tries to cover all the main categories, but there's an index of more involved examples at the end.

All of these examples are in plain Makefile. For cleaner syntax and better ergonomics, check out the equivalent idioms in CMK-lang.

Local Interpreters, No Containers

There are a few ways to express simple polyglots where you want to assume the interpreter is already available on the host.

Use polyglot.dispatch/<interpreter>,<def_block_name> for arbitrary interpreters like this:

#!/usr/bin/env -S make -f
# Demos make-targets in foreign languages, without a container.
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
# USAGE: ./demos/local-interpreter.mk

include compose.mk

# Look, here's a simple python script
define script.py
import sys
print('python world')
print('dollar signs are safe: $')
endef

# Minimal boilerplate to run the script, using a specific interpreter (python3).
# No container here, so this requires that the interpreter is actually available.
__main__: polyglot.dispatch/python3,script.py

# Multiple mappings for script/interpreter can co-exist,
# and using a more specific interpreter is just a matter of naming it.
demo.python39: polyglot.dispatch/python3.9,script.py

Note that the polyglot execution inherits the environment from make, so that passing data with environment variables is pretty easy. For shell and awk, see also the section for special guests.
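As a minimal sketch of that environment inheritance, the example below exports a variable from make and reads it back inside the embedded script. It relies only on `polyglot.dispatch` as used above; the names `greeting` and `greet.py` are hypothetical and chosen purely for illustration.

```makefile
#!/usr/bin/env -S make -f
include compose.mk

# Exported make variables are placed in the environment of every recipe.
export greeting=hello

# The script reads the variable from its own process environment.
define greet.py
import os
print(os.environ["greeting"], "from python")
endef

__main__: polyglot.dispatch/python3,greet.py
```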

Containerized Interpreters

When there's a stock image available for a container and no customization is needed, there are a few equivalent idioms that you can choose from, depending on your taste.

To illustrate, here are two ways to express a target written in Elixir.

#!/usr/bin/env -S make -f
# Demonstrating polyglots using elixir
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
# USAGE: ./demos/elixir-1.mk

include compose.mk

# First we pick an image and interpreter for the language kernel.
elixir.img=elixir:otp-27-alpine
elixir.interpreter=elixir

# Now define the elixir code
define hello_world.ex
import IO, only: [puts: 1]
puts("elixir World!")
System.halt(0)
endef

__main__: hello_world hello_world.ex

# A decorator-style idiom:
# Name the target, then get the target-body from spec.
hello_world:; \
    $(call docker.bind.script, \
        def=hello_world.ex img="${elixir.img}" \
        entrypoint=${elixir.interpreter})

# Alternate: Omit `def` argument if the target
# name matches the name of the code-block.
hello_world.ex:; \
    $(call docker.bind.script, \
        img=${elixir.img} entrypoint=${elixir.interpreter})

This example uses binding rather than importing, which is an important distinction that comes up in a few places for compose.mk. (See also: the style/idioms documentation)

For polyglot support that feels more "native", you'll probably want to use importing instead, as documented in the section for foreign code as first-class, or use the equivalent idioms in CMK-lang.

Using lower-level polyglot helpers is also possible, and can be done with pure targets and no Makefile-functions. While it's not necessarily recommended for direct use, it does help to build intuition about how this stuff works under the hood and power-users might need extra flexibility. See the appendix for details.

Custom Containerized Interpreters

Using locally-defined containers is mostly the same as the previous example, except that a Dockerfile is inlined and polyglot.import uses local_img=.. instead of img=..

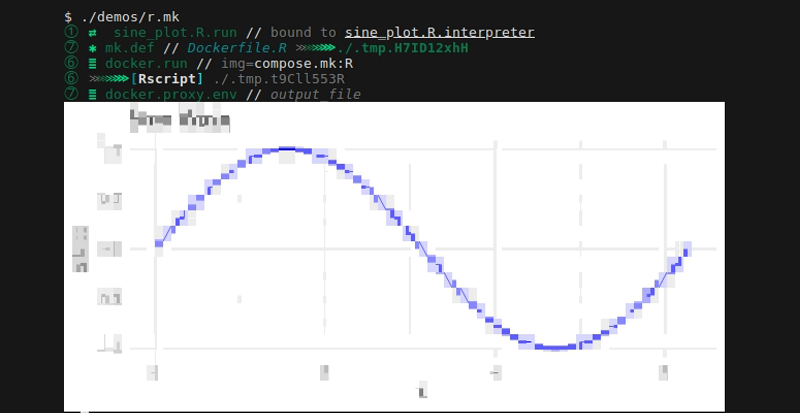

For this example, we'll use R to plot a sine-curve.

#!/usr/bin/env -S make -f
# Demonstrating polyglots using R
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
# USAGE: ./demos/r.mk

include compose.mk

export output_file=.tmp.png

# Look, it's a base image with small extras for R
define Dockerfile.RBase
FROM r-base:4.3.1
RUN R -e "install.packages('ggplot2', repos='https://cran.rstudio.com/')"
endef

# Look, it's a script written in R
define sine_plot.R
library(ggplot2)
x <- seq(0, 2 * pi, length.out = 1000)
df <- data.frame(x = x, y = sin(x))
outfile <- Sys.getenv("output_file")
png(filename = outfile, width = 400, height = 200)
ggplot(df, aes(x = x, y = y)) +
  geom_line(linewidth = 1, color = "blue") +
  ggtitle("Sine Wave") +
  xlab("x") + ylab("sin(x)") +
  theme_minimal()
endef

# Creates the sine_plot.R target, with env-variable pass-through
$(call polyglot.import, def=sine_plot.R \
    local_img=RBase entrypoint=Rscript env=output_file)

# Runs rscript on the polyglot, then previews output.
__main__: sine_plot.R io.preview.img/${output_file}

Above, we also use the env=.. argument to polyglot.import, indicating that we want pass-through of environment variables into the docker context. To pass multiple variables, use a single-quoted, space-delimited list, e.g. env='planet index'.

As a bonus, this demo also includes console-friendly result previews.

See the other embedded container tutorials or the Lean 4 demo for a more involved example. That demo also shows handling an additional complication that's pretty common, i.e. where the interpreter's invocation must also be customized.

Exotic Targets & Pipes

The example below demonstrates that unix pipes still work the way you would expect with polyglot-targets.

This matters for passing data to other languages. Combined with stages and/or structured data, it also makes bidirectional data flow easy, whether between different languages or back and forth with compose.mk itself.

#!/usr/bin/env -S make -f
# Demos make-targets in foreign languages and shows them working with pipes.
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# USAGE: ./demos/exotic-targets.mk

include compose.mk

# A more complex python script, testing comments, indentation, & using pipes
define script.py
import sys, json
input = json.loads(sys.stdin.read())
input.update(hello_python=sys.platform)
output = input
print(json.dumps(output))
for x in [1, 2, 3]:
    msg=f"{x} testing loops, indents, string interpolation"
    print(msg, file=sys.stderr)
endef

# Generates JSON with `jb`, passes data with a pipe,
# then parses JSON again on the python side.
__main__:
	${jb} hello=bash \
	| ${make} polyglot.dispatch/python3,script.py
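To make the data-flow idea more concrete, here is a minimal sketch that chains two polyglots with ordinary pipes, so JSON flows from bash into one script and on into another. It uses only `${jb}`, `${make}`, and `polyglot.dispatch` as seen in the example above. The block names `enrich.py` and `report.py` are hypothetical, and the sketch assumes the first stage writes nothing but JSON to stdout.

```makefile
#!/usr/bin/env -S make -f
include compose.mk

# Stage 1: read JSON on stdin, add a key, write JSON on stdout.
define enrich.py
import sys, json
data = json.load(sys.stdin)
data["platform"] = sys.platform
print(json.dumps(data))
endef

# Stage 2: read the enriched JSON and report its sorted keys on stderr.
define report.py
import sys, json
data = json.load(sys.stdin)
print(sorted(data), file=sys.stderr)
endef

# bash -> python -> python, connected only by pipes
__main__:
	${jb} hello=bash \
	| ${make} polyglot.dispatch/python3,enrich.py \
	| ${make} polyglot.dispatch/python3,report.py
```

Keeping diagnostics on stderr, as both the original script and this sketch do, is what leaves stdout free to carry structured data between stages.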

Containerized Script+Deps with UV

For variety, let's use docker.run.def/<def_block_name>, which is one of a few low-level helpers. This example has a different flavor, because by using uv, we can defer to a python script for deciding both the requirements and the runtime version.

#!/usr/bin/env -S make -f
# Demonstrates polyglots with compose.mk: Python code running in a container,
# with dependencies and no venv, using uv. No caching! Note that this lazily
# pulls not only the dependencies but also the python version itself,
# just in time.
#
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# USAGE: ./demos/uv.mk

include compose.mk

# Pick an image and an interpreter for the language kernel
# https://docs.astral.sh/uv/guides/integration/docker/
uv.img=ghcr.io/astral-sh/uv:debian
uv.interpreter=uv

# Now define the script and dependencies
define uv.hello_world
#!/usr/bin/env -S uv run --script
# /// script
# requires-python = ">=3.12"
# dependencies = [
#   "requests==2.31.0",
# ]
# ///
import requests
r = requests.get(
    'https://httpbin.org/basic-auth/user/pass',
    auth=('user', 'pass'))
print(r.status_code)
endef

# Run the code in the container, using low-level helpers.
__main__:
	img=${uv.img} \
	entrypoint=${uv.interpreter} \
	cmd="run --script" \
	def=uv.hello_world \
	${make} docker.run.def

As mentioned in code comments, this bootstraps JIT and caches neither the dependencies nor the python version. Hard to say exactly where that is useful, but it is an interesting example for being an extreme kind of "late-binding".

To get UV to leverage more caching, you can use a volume. This can be done by modifying the script accordingly, but also directly via the CLI by setting docker_args like this:

$ docker_args="-v ${HOME}/.cache:/root/.cache" ./demos/uv.mk

See the official docs for UV caching [9].

Compiled Languages

You could use compose.mk to create a pretty transparent compile-on-demand pipeline just before running, but that would be awkward. With compiled languages you're usually better off thinking in terms of containers instead of starting from polyglots. If you insist though, here are a few tips.

Without FFI

For simple use-cases without FFI [5] you can sometimes get another tool to handle the "compile on demand" thing. As long as you can find an image with an interpreter or build one in-place, you can wire stuff up pretty easily. For example, with tinycc:

#!/usr/bin/env -S make -f
# Demonstrating compiled-language polyglots in `compose.mk`.
#
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
# USAGE: ./demos/tinycc.mk

include compose.mk

define Dockerfile.tcc
FROM ${IMG_DEBIAN_BASE:-debian:bookworm-slim}
RUN apt-get update -qq; apt-get install -qq -y tcc
endef

define hello_world
#include <stdio.h>
int main() {
    printf("Hello, World from TinyCC!\n");
    return 0;
}
endef

tcc.interpreter/%:
	@# The tcc interpreter, runs the given tmp file as a script.
	@# This tool insists on '.c' file extensions and won't run otherwise,
	@# so this shim can't rely on usual default cleanup for input tmp file.
	mv ${*} ${*}.c
	cmd='tcc -run ${*}.c' ${make} mk.docker/tcc
	rm ${*}.c

# Declare the code-block as an object, bind it to the interpreter.
$(call polyglot.import, def=hello_world bind=tcc.interpreter)

# Use our new scaffolded targets for `preview` and `run`
__main__: Dockerfile.build/tcc hello_world.preview hello_world

For Java, try JShell, and for Rust, try rust-script.

With FFI

Typically the cleanest and most generic thing you can do here might involve FFI approaches [5], where languages like Elixir can dip into Erlang, or Scala can dip into Java, etc.

For the sake of variety and sticking closer to the idea of compiled languages, here's C via Julia's FFI, with the Julia code embedded in compose.mk.

#!/usr/bin/env -S make -f
# Demonstrating polyglots as first-class objects in `compose.mk`,
# plus usage of compiled languages via FFI.
#
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
#
# USAGE: ./demos/julia.mk

include compose.mk

# Pick an image and interpreter for the language kernel.
julia.img=julia:1.10.9-alpine3.21
julia.interpreter=julia

# Bind the docker image / entrypoint to a target,
# then create a unary target for accepting a filename.
julia:; ${docker.image.run}/${julia.img},${julia.interpreter}
julia.interpreter/%:; ${docker.curry.command}/julia

# Next we define some Julia code that uses `ccall` for access to C.
define hello_world
println("Hello world! (from Julia & C)")
using Printf
using Statistics  # For mean function
using Libdl  # For dlopen, dlsym, etc.

# Get current time using C's time() function
# In Julia container, we can use libc.so.6 or use C_NULL to make Julia find it
function get_system_time()
    return ccall(:time, Int64, (Ptr{Nothing},), C_NULL)
end

# Generate random numbers using rand() function and time as seed
function c_random_array(size::Int)
    ccall(:srand, Nothing, (UInt32,), UInt32(get_system_time()))
    result = Vector{Int}(undef, size)
    for i in 1:size
        result[i] = ccall(:rand, Int32, ())
    end
    return result
end

# Call C's math library for complex calculations
# Use dlopen to explicitly load libm.so.6, closing after use
function c_math_operations(x::Float64)
    libm = Libdl.dlopen("libm.so.6")
    sin_x = ccall(dlsym(libm, :sin), Float64, (Float64,), x)
    log_x = ccall(dlsym(libm, :log), Float64, (Float64,), x)
    sqrt_x = ccall(dlsym(libm, :sqrt), Float64, (Float64,), x)
    Libdl.dlclose(libm)
    return (sin=sin_x, log=log_x, sqrt=sqrt_x)
end

println("Julia C Library Integration Example\n==================================")

# Generate and analyze random numbers using C's rand
println("Random numbers from C's rand():")
rand_array = c_random_array(10)
println(" Sample: $rand_array")
println(" Mean=$(mean(rand_array)) Max=$(maximum(rand_array))")
println()

# Math operations using C's libm
x = 2.0
math_results = c_math_operations(x)
println("Math operations on x = $x:")
@printf " sin(x) = %.6f\n" math_results.sin
@printf " log(x) = %.6f\n" math_results.log
@printf " sqrt(x) = %.6f\n" math_results.sqrt
endef

# Declare the above code-block as a first class object, and bind it to an interpreter.
$(call polyglot.import, def=hello_world bind=julia.interpreter)

# Use our new scaffolded targets for script-execution and `preview`
__main__: hello_world hello_world.preview

See also the official docs for Julia + C [8].

Foreign Code as First Class

We've already met polyglot.import and the lower-level helpers polyglot.dispatch, docker.bind.script, and docker.run.def, but so far we've focused more on examples than discussion. See the style and idioms documentation for an overview of binding vs importing.

Amongst all these related helpers, polyglot.import is the most generic. It actually creates new targets and supports several arguments, including configurable namespacing, environment variable pass-through, and other conveniences. Read on for more detailed examples and discussion. And if you're all in on polyglotting, make sure to check out the equivalent idioms in CMK-lang.

Bind Blocks to Containerized Interpreters

First-class support for foreign code works by using one of the polyglot.bind.* functions, and then several related targets are attached to the implied namespace. In the simplest case, a code block only gets a preview target, which is capable of showing syntax-highlighted code. (Under the hood, this uses a dockerized version of pygments).

A more typical use-case passes the name of the code block involved, plus the details of an interpreter, which allows us to provide an additional <code>.run method.

#!/usr/bin/env -S make -f
# Demonstrating first-class support for foreign code-blocks in `compose.mk`.
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# USAGE: ./demos/code-objects.mk

include compose.mk

# First we pick an image and interpreter for the language kernel.
python.img=python:3.11-slim-bookworm
python.interpreter=python

# Define the python code
define hello_world
import sys
print(f'hello world, from {sys.version_info}')
endef

# Import the code-block, creating additional target scaffolding for it.
$(call polyglot.import, def=hello_world \
    img=${python.img} entrypoint=${python.interpreter})

# With the new target-scaffolding in place, now we can use it.
# First we preview the code with syntax highlighting,
# then run the code inside the bound interpreter.
__main__: hello_world.preview hello_world

Bind Blocks to Targets

Sometimes you can't use an image/interpreter directly as seen above, because maybe you need to pass arguments to the interpreter or otherwise customize its startup. In this case the solution is to set up a unary target as a shim, i.e. something that accepts a filename and does the rest of whatever you need.

We've already seen an example of this earlier with Julia, where polyglot.import uses arguments like def=<codeblock_name> bind=<target_name>. In this example we'll additionally pass an argument for namespace, to control where our new run and preview targets are created, plus an argument for env, to control which environment variables from the host are passed through to the polyglot.

#!/usr/bin/env -S make -f
# Demonstrating first-class support for foreign code-blocks in `compose.mk`.
#
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# USAGE: ./demos/code-objects.mk

include compose.mk

# Look, it's a python script
define hello_world.py
import os
print(f'hello {os.environ["planet"]} {os.environ["index"]}')
endef

# Pick an image and interpreter for the language kernel.
# Uses a stock-image but modifies the default invocation.
python.img=python:3.11-slim-bookworm
my_interpreter/%:
	cmd="python -O -B ${*}" \
	${make} docker.image.run/${python.img}

# Constants we can share with subprocesses or polyglots
export planet=earth
export index=616

# Import the code-block to a specific namespace,
# creating scaffolding for `run` and `preview`, while
# passing through certain parts of this environment
$(call polyglot.import, \
    def=hello_world.py bind=my_interpreter \
    namespace=WORLD env='planet index')

# With the new target-scaffolding in place, now we can use it.
# First we preview the code with syntax highlighting,
# then run the code inside the bound interpreter.
__main__: WORLD.preview WORLD

See also the lean demo, which needs a similar shim to modify the interpreter invocation.

Binding Multiple Blocks in One Shot

A variation on this pattern is to use polyglots.import. Note that in this version, the word polyglots is plural! This version supports binding multiple chunks of code to the same interpreter.

#!/usr/bin/env -S make -f
# Demonstrating first-class support for foreign code-blocks in `compose.mk`.
#
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# USAGE: ./demos/code-objects.mk

include compose.mk

# Pick an image and interpreter for the language kernel,
# using a target to customize the default interpreter invocation.
python.img=python:3.11-slim-bookworm
my_interpreter/%:
	cmd="python -O -B ${*}" \
	${make} docker.image.run/${python.img}

# Multiple blocks of python code
define two.py
import sys
print(f'hello world, from {sys.version_info}')
for i in range(3):
    print(f" count{i}")
endef

define one.py
import sys
print([sys.platform, sys.argv])
endef

# Bind multiple code blocks, creating scaffolding for each
$(call polyglots.import, pattern=[.]py bind=my_interpreter)

# Test the scaffolded targets
__main__: one.py two.py

In the example above, we passed [.]py, which pattern-matches against all available define-block names and binds each of them to my_interpreter.

Templating Languages

Use-cases related to templating are arguably more about embedded data, which is documented elsewhere. Still, this goes together well with the example above, because if you have one template you might have many.

Here we use jinjanator, a CLI for use with jinja, but the approach itself can be extended to work with any other templating engine [4].

#!/usr/bin/env -S make -f
# Demonstrates templating in jinja.
#
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# USAGE: ./demos/j2-templating.mk

include compose.mk

define Dockerfile.jinjanator
FROM python:3.11-slim-bookworm
RUN pip install jinjanator --break-system-packages
endef

define hello_template.j2
{% for i in range(3) %}
hello {{name}}! ( {{loop.index}} )
{% endfor %}
endef

define bye_template.j2
bye {{name}}!
endef

# An "interpreter" for templates.
# This renders the given template file using JSON on stdin.
render/%:
	cmd="--quiet -fjson ${*} /dev/stdin" \
	${make} mk.docker/jinjanator,jinjanate

# Import the template-block, binding the
# interpreter & creating `*.j2` targets
$(call polyglots.import, pattern=[.]j2 bind=render)

# Generates JSON with `jb`, then pushes it into the template renderer.
# This shows how to call targets using generated symbols,
# and then (equivalently) using explicit targets.
__main__: Dockerfile.build/jinjanator
	${jb} name=foo | ${hello_template.j2}
	${jb} name=foo | ${bye_template.j2}
	${jb} name=foo | ${make} bye_template.j2

This prints "hello foo!" three times and "bye foo!" twice (once for each of the two equivalent invocation styles), as expected per the templates. In keeping with other structured IO patterns in compose.mk, we prefer JSON, generating it with jb and configuring jinjanator to use it, but this can easily be changed to work with environment variables or YAML instead.

Special Guests

For practical reasons there are 2 specific languages that have the status of being special guests, i.e. bash and awk. Technically this is a special case of Local Interpreters, No Containers, but it's worth mentioning separately since this can be really useful, and because the interpreters are usually available whenever make is.

compose.import.script

In the case of bash, the simplest way to convert a script into a target is to use the compose.import.script macro.

#!/usr/bin/env -S make -f
# Demonstrating import shell-script to target.
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
# USAGE: ./demos/script-dispatch-stock.mk

include compose.mk

# Look, here's a simple shell script
define script.sh
set -x
printf "multiline stuff\n"
for i in $(seq 2); do
    echo "Iteration $i"
done
endef

# Directly imports script to target of same name.
$(call compose.import.script, def=script.sh)

__main__:
	$(call log.test, Running embedded script)
	${make} script.sh | grep 'Iteration 2'

The compose.import.script macro is convenient, but you will often want lower-level access with more flexibility (e.g. piped stdin or script arguments). See the next section for details.

Using io.awk and io.bash

Meet io.awk/<def_name> and io.bash/<def_name,optional_args>

Use-cases include things like:

- Fast or sophisticated string-manipulation. Internally, io.awk is frequently used in cases where make is simply not up to the necessary string-manipulation task, but using something like a python-stack via docker is just too slow. (Most of the compiler is built this way.)

- Porting existing shell, piecewise. Typically io.bash is a stepping stone in a process of moving existing bash scripts towards native make-targets. You can simply embed any small existing script, incorporate it into existing automation, and avoid immediately translating bashisms to makeisms until you finish a proof of concept. You can also think of io.bash as a direct way to turn existing scripts into pseudo-functions.

See the test-suite below for usage hints.

#!/usr/bin/env -S make -f
# Demonstrating "special guest" polyglots in awk/bash.
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also:
#  * http://robot-wranglers.github.io/compose.mk/demos/polyglots
#  * demos/script-dispatch-host.mk
# USAGE: ./demos/guests.mk

include compose.mk

# Runs all of the test.* targets
__main__: flux.timer/flux.star/test.

define echo.awk
{print $0}
endef

test.io.awk:
	$(call log.test, Run the embedded awk script on stdin)
	echo foo bar | ${io.awk}/echo.awk | grep "foo bar"

define echo.sh
cat /dev/stdin
endef

test.io.bash.stdin:
	$(call log.test, Run the embedded bash script on stdin)
	echo foo bar | ${io.bash}/echo.sh | grep "foo bar"

define tmp.sh
echo hello ${1} ${2}
endef

test.io.bash.args:
	$(call log.test, Run the embedded bash with arguments)
	${io.bash}/tmp.sh,foo,bar | grep "foo bar"

define broken.sh
exit 1
endef

test.io.bash.broken:
	$(call log.test, Check exit status for broken script)
	! ${io.bash}/broken.sh

As you can see above, both kinds of guests support piped input by default, and io.bash optionally supports argument-proxy. As usual for polyglots, content inside define-blocks isn't messed with, and we don't need to double-escape $.

Other Polyglot Examples

That's it for the basic taxonomy of different polyglots. Again, if you want a much more native look and feel for this stuff and aren't committed to staying backwards compatible with classic Makefile, then it's easy to adapt these examples to the much cleaner CMK-lang style polyglots.

For bigger demos that are related, see also these examples which are more concrete and closer to real life:

Although it isn't exactly a polyglot, the Notebooking Demo is also related and adds support to jupyter for several languages.

Implementation Details

Tip

The mk.def.* targets always leave the data inside the define-blocks completely untouched, meaning that there's no requirement for escaping the contents. Strings, tabs, spaces, and things like $ are always left alone.

This also means the content is fairly static, i.e. no pre-execution templating. This is a feature! It discourages JIT code-generation in favor of more structured interactions via parameter-passing, environment variables, pipes, etc.

It comes up sometimes that people want to have polyglots with pure make, not for building weird hybrid applications in the compose.mk sense, but just to get decent string manipulation abilities or similar. Historically the suggested approaches involved sketchy .ONESHELL hacks [1], but these come with several issues.

The approach used by compose.mk still has some limitations [3], but it is overall much more robust and reliable than previous approaches, and easily supports multiple polyglots side-by-side and alongside more traditional targets. Most of this stuff hinges on multi-line define-blocks, plus the ability of compose.mk to handle some reflection. (See the API for mk.* for more details.)
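The core idea can be sketched in plain GNU Make, without compose.mk. This illustrates the general technique and is not necessarily how compose.mk implements it: `$(value ...)` retrieves a define-block without expanding it, an exported variable carries it into the recipe's environment, and `.VARIABLES` provides the reflection needed to find blocks by name. The names `SCRIPT_BODY`, `py_blocks`, `demo`, and `list` are hypothetical.

```makefile
# A define-block holding foreign code. Nothing inside is escaped.
define script.py
import sys
print('dollar signs are safe: $')
endef

# $(value ...) returns the block verbatim. `:=` stores that text without
# further expansion, and `export` places it in the recipe environment.
export SCRIPT_BODY := $(value script.py)

# Reflection: the names of all make variables that end in `.py`.
py_blocks := $(filter %.py,$(.VARIABLES))

# Hand the script to a host interpreter; the doubled dollar sign in the
# recipe reaches the shell as a single one, so the shell does the lookup.
demo:
	@python3 -c "$$SCRIPT_BODY"

# Show which blocks were discovered.
list:
	@echo $(py_blocks)
```

Running `make demo` should print the message with its `$` intact, provided python3 is available on the host. Interpreters that insist on a real file rather than `-c` need the temp-file handling described in the appendix.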

Appendix

Low-level Helpers

Low-level polyglot helpers are not necessarily for direct use, but it does help build intuition about how this stuff works, and power-users might need extra flexibility. For illustration purposes:

#!/usr/bin/env -S make -f
# Demonstrating polyglots using elixir (low-level helpers)
# Part of the `compose.mk` repo. This file runs as part of the test-suite.
# See also: http://robot-wranglers.github.io/compose.mk/demos/polyglots
# USAGE: ./demos/elixir-1.mk

include compose.mk

# First we pick an image and interpreter for the language kernel.
# Here, iex can work too, but there are minor differences.
elixir.img=elixir:otp-27-alpine
elixir.interpreter=elixir
elixir=${elixir.img},${elixir.interpreter}

# Now define the elixir code
define hello_world
import IO, only: [puts: 1]
puts("elixir World!")
System.halt(0)
endef

__main__: alt1 alt2 alt3

# Now show three equivalent ways to run the code inside the container
alt1:
	@# Classic style invocation, using simplest available helper
	img=${elixir.img} entrypoint=${elixir.interpreter} \
	def=hello_world ${make} docker.run.def

alt2:
	@# Another way, using piping and streams
	${mk.def.read}/hello_world \
	| ${stream.to.docker}/${elixir}

alt3:
	@# Fully manual style, handling your own temp files,
	@# and sticking to targets instead of using pipes
	${mk.def.to.file}/hello_world/temp-file
	cmd=temp-file ${make} docker.image.run/${elixir}
	rm -f temp-file

Behind the scenes both alt1 and alt2 are just abstracting away the boring details of "happens in a container" and "create/clean a temp file" [2]. In alt3, you can see a more explicit version that might be useful for clarity, but note that this leaves temp files around after errors.
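If the leftover temp file in alt3 is a concern, one possible variation is to run the steps as a single shell command with an EXIT trap. This is only a sketch: it reuses `mk.def.to.file` and `docker.image.run` exactly as alt3 does, and it assumes that `${mk.def.to.file}/...` expands to an ordinary shell command that can be chained with `&&`, and that the recipe shell supports `trap ... EXIT`.

```makefile
#!/usr/bin/env -S make -f
include compose.mk

elixir.img=elixir:otp-27-alpine
elixir.interpreter=elixir
elixir=${elixir.img},${elixir.interpreter}

define hello_world
import IO, only: [puts: 1]
puts("elixir World!")
System.halt(0)
endef

# Like alt3, but the recipe is one shell command line, so the EXIT trap
# removes the temp file whether or not the container run succeeds.
__main__:
	trap 'rm -f temp-file' EXIT; \
	${mk.def.to.file}/hello_world/temp-file \
	&& cmd=temp-file ${make} docker.image.run/${elixir}
```

The backslash continuations matter here: make normally runs each recipe line in its own shell, so the trap only covers the later steps when everything is joined into one line.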

References

- [2] You might think from the elixir examples that this can be abstracted away to use streams somehow, but we opt to avoid it because some interpreters require actual files.

- [3] Yeah, sorry, you might be out of luck if your language of choice actually uses define and endef keywords. If you think you have a reasonable use-case, please do create an issue describing it.

- [4] For minimal basic templating without an extra container, you could use the local-interpreter strategy with m4, which is usually present anywhere make is.