Cluster Lifecycle Demo

Note

For more background information, make sure to check out the demos overview page.

This demo shows an example of a project-local Kubernetes cluster lifecycle. It orchestrates several tools from the toolbox, such as k3d, kubectl, helm, ansible, and promtool, to install and exercise things like Prometheus and Grafana. See also the overview of demos for information about k8s-tools demos in general, and the official docs for the kube-prom stack.

Basic Usage

Running the demo is simple:

# Default entrypoint runs clean, create,

# deploy, test, but does not tear down the cluster.

$ ./demos/cluster-lifecycle.mk

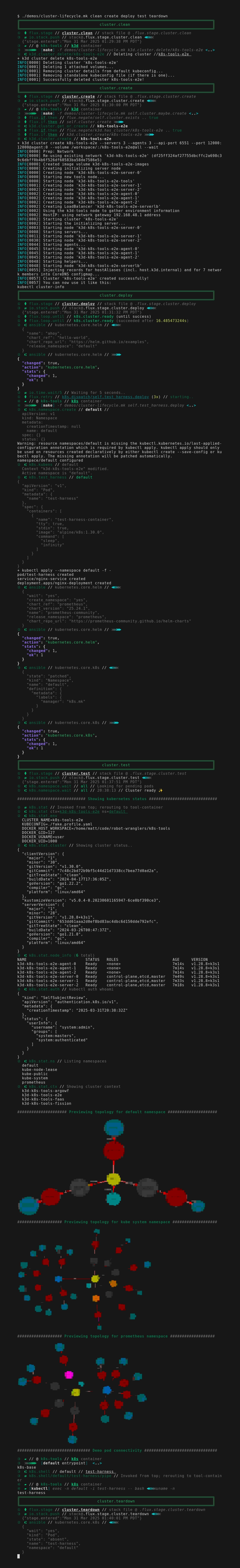

One particularly interesting feature you can see above is the graphical preview of pod/service topologies from various namespaces. Topology previews are console-friendly and also work from CI/CD systems like github-actions. This allows you to visually parse the results of complex orchestration very quickly. At this zoomed-out scale you won't be able to tell specifics, but it's pretty useful for showing whether results have changed.

Interactive Workflows

# End-to-end, again without teardown

$ ./demos/cluster-lifecycle.mk clean create deploy test

# Interactive shell for a cluster pod

$ ./demos/cluster-lifecycle.mk cluster.shell

# Finally, teardown the cluster

$ ./demos/cluster-lifecycle.mk teardown

..

Grafana and Prometheus were already installed via the deploy.grafana target, which runs as a prerequisite for the main deploy target and uses the standard charts. So far, though, we haven't exercised or interacted with the installation. Let's take a look at the common interactive cluster workflows.

It's a good idea to start with usage of cluster.wait, which is just an alias for the k8s.wait target, and ensures that all pods are ready. This isn't strictly necessary since the automation does it already, but it's a good reminder that the whole internal API for k8s.mk is automatically available as a CLI.

$ ./demos/cluster-lifecycle.mk cluster.wait

.. lots of output ..
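For readers who want a feel for what "wait until pods are ready" amounts to, here is a minimal sketch using plain kubectl. It is not the k8s.mk implementation: the function name wait_pods_in is hypothetical, and it assumes kubectl is available on the host with KUBECONFIG pointing at the demo cluster.

```shell
# Hypothetical helper, not part of k8s.mk: block until every pod in one
# namespace reports the Ready condition, or fail when the timeout expires.
# Assumes `kubectl` is on PATH and KUBECONFIG points at the demo cluster.
wait_pods_in() {
    # $1 is the namespace; $2 is the timeout (180s is an arbitrary default).
    kubectl wait --for=condition=Ready pods --all \
        --namespace "$1" --timeout="${2:-180s}"
}
```

Usage would look like `wait_pods_in default 60s`. The sketch is scoped to a single namespace on purpose: pods that belong to completed Jobs never become Ready, so waiting on every pod cluster-wide can time out even when the cluster is healthy.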

See the demo source for the implementation of deploy.grafana. It's not too surprising, but as usual, we interact with helm without installing it explicitly, without assuming that it's installed, and without writing docker run ... everywhere.

One interesting thing about the approach taken with deploy.grafana is that it allows for custom helm-values without an external file, and also without a ton of awkward CLI overrides, by just inlining a small file that would normally cause a lot of annoying context switching. See grafana.helm.values.
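The same idea can be sketched in plain shell with a here-document, which may help if you want to try it outside of make. This is an illustration only: the function name is hypothetical, it assumes helm is on the host and that the prometheus-community repo has already been added, and the values shown are a subset of those in grafana.helm.values.

```shell
# Hypothetical helper: install the chart with values supplied on stdin,
# so no separate values file is needed. Assumes `helm` is on PATH and the
# prometheus-community chart repo was added beforehand.
install_with_inline_values() {
    helm install prometheus-stack \
        prometheus-community/kube-prometheus-stack \
        --namespace monitoring --create-namespace \
        --values /dev/stdin <<'EOF'
grafana:
  grafana.ini:
    analytics:
      check_for_updates: false
    log:
      mode: console
EOF
}
```

Quoting the EOF delimiter keeps the shell from expanding anything inside the YAML, which matters once values contain `$` characters.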

Port Forwarding

Let's use kubefwd to set up port-forwarding so we can actually look at Grafana. Since kubefwd is basically baked into k8s-tools.yml and has existing helpers in k8s.mk, our demo source code only needs to set some configuration and then call the library.

Using kubefwd is nicer than kubectl port-forward in that you should actually get working DNS. Using kubefwd via k8s.mk has the added benefit that it handles parts of setup and shutdown automatically, which makes the target more idempotent. (Hence the docker.stop stuff at the beginning of the logs below.)

$ ./demos/cluster-lifecycle.mk fwd.grafana

≣ docker.stop // kubefwd.k8s-tools.prometheus.grafana

≣ docker.stop // No containers found

⑆ kubefwd // prometheus // grafana

{

"namespace": "prometheus",

"svc": "grafana"

}

⑆ kubefwd // container=kubefwd.k8s-tools.prometheus.grafana

⑆ kubefwd // cmd=kubefwd svc -n prometheus -f metadata.name=grafana --mapping 80:8089 -v

⇄ flux.timeout.sh (3s) // docker logs -f kubefwd.k8s-tools.prometheus.grafana

INFO[11:17:29] _ _ __ _

INFO[11:17:29] | | ___ _| |__ ___ / _|_ ____| |

INFO[11:17:29] | |/ / | | | '_ \ / _ \ |_\ \ /\ / / _ |

INFO[11:17:29] | <| |_| | |_) | __/ _|\ V V / (_| |

INFO[11:17:29] |_|\_\\__,_|_.__/ \___|_| \_/\_/ \__,_|

INFO[11:17:29]

INFO[11:17:29] Version 1.22.5

INFO[11:17:29] https://github.com/txn2/kubefwd

INFO[11:17:29]

INFO[11:17:29] Press [Ctrl-C] to stop forwarding.

INFO[11:17:29] 'cat /etc/hosts' to see all host entries.

INFO[11:17:29] Loaded hosts file /etc/hosts

INFO[11:17:29] HostFile management: Backing up your original hosts file /etc/hosts to /root/hosts.original

INFO[11:17:29] Successfully connected context: k3d-k8s-tools-e2e

DEBU[11:17:29] Registry: Start forwarding service grafana.prometheus.k3d-k8s-tools-e2e

DEBU[11:17:29] Resolving: grafana to 127.1.27.1 (grafana)

INFO[11:17:29] Port-Forward: 127.1.27.1 grafana:8089 to pod grafana-5bfc75d5b4-gwxqs:3000

⇄ flux.timeout.sh (3s) // finished

⇄ Connect with: http://admin:prom-operator@grafana:8089

As advertised in the output above, the forwarding should work from outside of the cluster, literally using grafana:8089 in the browser of your choice and not "localhost". To shut down the tunnel, just use ./demos/cluster-lifecycle.mk fwd.grafana.stop.
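The stop-before-start behavior visible at the top of the logs is what makes repeated runs safe. A rough sketch of that pattern in plain shell follows. The function name is hypothetical and this is not how k8s.mk implements it; it only assumes the docker CLI is on the host.

```shell
# Hypothetical helper: stop any running container whose name matches, so
# that starting the forwarder again is safe to repeat. Assumes `docker`
# is on PATH. Note that docker's name filter matches substrings.
stop_if_running() {
    ids=$(docker ps -q --filter "name=$1")
    if [ -z "$ids" ]; then
        echo "No containers found"
        return 0
    fi
    # Word-splitting is intended here: one ID per matching container.
    docker stop $ids
}
```

Usage would look like `stop_if_running kubefwd.k8s-tools.prometheus.grafana`, using the container name from the logs above.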

Tests that exercise the Grafana API haven't been run yet, because testing services from inside the cluster isn't ideal. Testing from outside like this is better, but does require curl on the docker host.

Running tests looks like this:

$ ./demos/cluster-lifecycle.mk test.grafana

⇄ Testing Grafana API

{

"id": 25,

"uid": "b0a9d1f2-6150-4b49-b82c-7267af628ce7",

"orgId": 1,

"title": "Prometheus / Overview",

"uri": "db/prometheus-overview",

"url": "/d/b0a9d1f2-6150-4b49-b82c-7267af628ce7/prometheus-overview",

"slug": "",

"type": "dash-db",

"tags": ["prometheus-mixin"],

"isStarred": false,

"sortMeta": 0,

"isDeleted": false

}
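The test above logs in once, keeps the session cookie, and reuses it for the API call. As a standalone sketch of that two-request flow (the function name and argument order are hypothetical; the /login and /api/search endpoints are the ones used in the demo source, and curl is assumed on the host with the tunnel already running):

```shell
# Hypothetical helper: log in to Grafana, then query the search API using
# the session cookie from the login response.
grafana_search() {
    base_url=$1
    password=$2
    jar=$(mktemp) || return 1
    # -c stores cookies from the login response in the jar file.
    curl -sS -c "$jar" -X POST "$base_url/login" \
        -H "Content-Type: application/json" \
        -d "{\"user\":\"admin\",\"password\":\"$password\"}" >/dev/null \
    && curl -sS -b "$jar" "$base_url/api/search"   # -b sends them back
    status=$?
    # Remove the cookie jar whether or not the requests succeeded.
    rm -f "$jar"
    return $status
}
```

Usage would look like `grafana_search http://grafana:8089 prom-operator`, with the output piped to jq for filtering if it is available.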

Batteries Included, Room to Grow

As mentioned previously, the whole internal API for k8s.mk is automatically available as a CLI, and we've already seen that the ./demos/cluster-lifecycle.mk script basically ties that API to this cluster, which can now be tailored to your own project.

The compose.mk API isn't tied to the "local" project in quite the same way, but is generally useful for automation, and especially automation with docker and docker-compose.

Here are a few random examples of other stuff that ./demos/cluster-lifecycle.mk can already do for you, just by virtue of importing functionality from those libraries.

Via k8s.mk API:

# Show all pods

$ ./demos/cluster-lifecycle.mk k8s.pods

# Show pods in the `monitoring` namespace

$ ./demos/cluster-lifecycle.mk k8s.pods/monitoring

# Details for everything deployed with helm

$ ./demos/cluster-lifecycle.mk helm.stat

# Delete all k3d clusters (not just project-local one)

$ ./demos/cluster-lifecycle.mk k3d.purge

# Details for all k3d clusters

$ ./demos/cluster-lifecycle.mk k3d.stat

Via compose.mk API:

# Version info for docker and docker compose

$ ./demos/cluster-lifecycle.mk docker.stat

# Stop all containers

$ ./demos/cluster-lifecycle.mk docker.stop.all

# Version info for `make`

$ ./demos/cluster-lifecycle.mk mk.stat

For many of these tasks you might wonder, why not just use kubectl directly? Well, k8s.mk isn't intended to replace that, and if kubectl is actually available on your host, you can of course run KUBECONFIG=local.cluster.yml kubectl .. yourself. But let's take a moment to look at all the other things this demo accomplished.

- We avoided directly messing with kubectx to set cluster details
- We avoided breakage when that one guy has another KUBECONFIG exported in their bash profile
- We avoided messing with kubernetes namespaces by using k8s.kubens as a context-manager
- We didn't have to remember the details of kubectl exec invocation
- We used an abstracted, centralized, and idempotent version of k3d cluster create
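The KUBECONFIG points above come down to two small habits: create the project-local file with tight permissions, and set the variable per command rather than trusting the environment. A minimal sketch in shell, where the function name is hypothetical and the file name matches the one used in the demo source:

```shell
# Hypothetical helper: run one command against a project-local kubeconfig,
# ignoring any KUBECONFIG exported by the caller's shell profile.
with_local_kubeconfig() {
    cfg=$1
    shift
    # Create the file if it is missing. With umask 066 a newly created
    # file gets mode 0600; an existing file keeps its current mode.
    ( umask 066; touch "$cfg" )
    # The assignment prefix applies to this single command only.
    KUBECONFIG="$cfg" "$@"
}
```

Usage would look like `with_local_kubeconfig ./local.cluster.yml kubectl get pods`. The caller's own KUBECONFIG is left untouched afterwards.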

In particular, we not only automated the cluster bootstrap and interactions, we also avoided installing helm, kubectl, k3d, jq, jb, ansible, and about a dozen other tools. We also avoided writing instructions on installing those things. And we avoided conflicting versions for all those things on different people's workstations.
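To make the "no host installs" point concrete, here is one way to run a tool from a compose service instead of from the host. This is a sketch under assumptions, not the mechanism k8s.mk uses internally: the function name is hypothetical, and the service names passed in (for example "helm" or "k8s") are guesses about what k8s-tools.yml defines.

```shell
# Hypothetical helper: run a command inside a service from the compose
# file rather than relying on a host install. Assumes the docker CLI with
# the compose plugin, and that k8s-tools.yml is in the current directory.
in_tool_container() {
    service=$1
    shift
    # --rm removes the one-off container once the command exits.
    docker compose -f k8s-tools.yml run --rm "$service" "$@"
}
```

Usage would look like `in_tool_container helm helm version`. If a service defines its own entrypoint, the arguments after the service name are appended to that entrypoint, so the exact invocation depends on how each service is declared.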

Until we decide we're ready for it, we also get to skip a lot of hassle with extra files, extra repos, or extra context-switching just for small manifests like the test-harness pod, or for small changes to helm-values.

At the same time, we accomplished some very useful decoupling. This automation isn't tied to any of the specifics of our CI/CD platform, or to any dedicated dev-cluster where we might want end-to-end tests, but it still works for both of those use-cases, and it's fast enough for local development. We also avoided shipping a "do everything" magical omnibus container so that our whole kit still retains a lot of useful modularity.

Source Code

#!/usr/bin/env -S make -f

#░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░

# Demonstrating full cluster lifecycle automation with k8s-tools.git.

# This exercises `compose.mk`, `k8s.mk`, plus the `k8s-tools.yml` services to

# interact with a small k3d cluster. Verbs include: create, destroy, deploy, etc.

#

# See the documentation here[1] for more discussion.

# This demo ships with the `k8s-tools` repo and runs as part of the test-suite.

#

# USAGE:

# ./demos/cluster-lifecycle.mk clean create deploy test

#

# REF:

# [1] https://robot-wranglers.github.io/k8s-tools/demos/cluster-lifecycle

#░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░

# Boilerplate section.

# Ensures local KUBECONFIG exists & ignores anything from environment

# Sets cluster details that will be used by k3d.

# Generates target-scaffolding for k8s-tools.yml services

# Setup the default target that will do everything, end to end.

#░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░

include k8s.mk

export KUBECONFIG:=./local.cluster.yml

$(shell umask 066; touch ${KUBECONFIG})

$(call compose.import, file=k8s-tools.yml)

__main__: clean create deploy test

# Cluster lifecycle basics. These are similar for all demos,

# and mostly just setting up CLI aliases for existing targets.

# The `stage` are just announcing sections, `k3d.*` are library calls.

#░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░

cluster.name=lifecycle-demo

clean: stage/cluster.clean k3d.cluster.delete/${cluster.name}

create: stage/cluster.create k3d.cluster.get_or_create/${cluster.name}

wait: k8s.cluster.wait

test: stage/cluster.test infra.test app.test

# Local cluster details and main automation.

#░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░

pod_name=test-harness

pod_namespace=default

# Main deployment entrypoint: First, announce the stage, then wait for cluster

# to be ready. Then run a typical example operation with helm with retries,

# then setup labels and a test-harness, and the prometheus stack

deploy: \
  stage/deploy \
  flux.retry/3/deploy.helm \
  deploy.test_harness \
  deploy.labels \
  deploy.grafana

deploy.labels:
	@# Add a random label to the default namespace
	kubectl label namespace default foo=bar

# Run a typical deployment with helm. Using `helm` directly is

# possible, but ansible is often nicer for idempotent operations.

# This uses a container and can be called directly from the host..

# so there is no need to install ansible *or* helm. For more

# direct usage, see the grafana deploy later in this file.

deploy.helm:
	${json.from} name=ahoy \
		chart_ref=hello-world \
		release_namespace=default \
		chart_repo_url="https://helm.github.io/examples" \
	| ${make} ansible.helm

# Here `deploy.test_harness` has a public interface chaining to a "private"

# target for internal use. The public interface just runs the private one

# inside the `k8s` container, which makes it safe to use `kubectl` directly

# even though it might not be present on the host. The cluster should be

# already setup but we retry a maximum of 3 times anyway just for illustration

# purposes.

deploy.test_harness: flux.retry/3/k8s.dispatch/self.test_harness.deploy

self.test_harness.deploy: \
  k8s.kubens.create/${pod_namespace} \
  k8s.test_harness/${pod_namespace}/${pod_name} \
  kubectl.apply/demos/data/nginx.svc.yml

# Simulate some infrastructure testing after setup is done.

# This just shows the cluster's pod/service topology

infra.test:
	label="Showing kubernetes status" \
		${make} io.print.banner k8s.stat
	label="Previewing topology for default namespace" \
		${make} io.print.banner k8s.graph.tui/default/pod
	label="Previewing topology for kube-system namespace" \
		${make} io.print.banner k8s.graph.tui/kube-system/pod

# Simulate some application testing after setup is done.

# This pulls data from a pod container deployed to the cluster,

# shows an overview of whatever's already been deployed with helm.

app.test:
	label="Demo pod connectivity" ${make} io.print.banner
	# echo uname -n | ${make} kubectl.exec.pipe/${pod_namespace}/${pod_name}
	label="Helm Overview" ${make} io.print.banner helm.stat

# Grafana setup and other optional, more interactive stuff that's part of

# the demo documentation. User can opt-in and walk through this part manually

# We include the grafana.ini below just to demonstrate passing custom values

# to the helm chart. For more configuration details see kube-prom-stack[1],

# grafana's docs [2]. More substantial config here might involve custom

# dashboarding with this [3]

#

# [1] https://github.com/prometheus-community/helm-charts

# [2] https://grafana.com/docs/grafana/latest/setup-grafana/configure-grafana/

# [3] https://docs.ansible.com/ansible/latest/collections/grafana/grafana/dashboard_module.html

#░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░

# Custom values to pass to helm, just to show we can.

# See `deploy.grafana` for usage, see [2] for docs

define grafana.helm.values
grafana:
  grafana.ini:
    analytics:
      check_for_updates: false
    grafana_net:
      url: https://grafana.net
    log:
      mode: console
    paths:
      data: /var/lib/grafana/
      logs: /var/log/grafana
      plugins: /var/lib/grafana/plugins
      provisioning: /etc/grafana/provisioning
endef

# NB: Default password is preset by the charts. Real usage should override

# but with grafana, your best bet is jumping straight into oauth. There are

# more than 5 years of github issues and forum traffic about fixes, regressions,

# conflicting info and general confusion for setting non-default admin passwords

# programmatically. Want an experimental setup that avoids auth completely?

# Those docs are also lies!

grafana.password=prom-operator

grafana.port_map=80:${grafana.host_port}

grafana.host_port=8089

grafana.url_base=http://grafana:${grafana.host_port}

grafana.chart.version=72.2.0

grafana.chart.url=https://prometheus-community.github.io/helm-charts

grafana.namespace=monitoring

grafana.pod_name=prometheus-stack-grafana

# Deployment for grafana. Use helm directly this time instead of via ansible.

# Since the host might not have `helm` or not be using a standard version, we

# run inside the helm container. Note the usage of `grafana.helm.values` to

# provide custom values to helm, without any need for an external file.

deploy.grafana:; $(call containerized.maybe, helm)

.deploy.grafana: stage/Grafana k8s.kubens.create/${grafana.namespace}
	$(call io.log, ${bold}Deploying Grafana)
	( helm repo add prometheus-community ${grafana.chart.url} \
		&& helm repo update \
		&& ${mk.def.read}/grafana.helm.values | ${stream.peek} \
		| helm install prometheus-stack \
			prometheus-community/kube-prometheus-stack \
			--namespace ${grafana.namespace} --create-namespace \
			--version ${grafana.chart.version} \
			--values /dev/stdin \
		&& ${make} helm.stat ) | ${stream.as.log}

# Starts port-forwarding for grafana webserver. Working external DNS from host

fwd.grafana:
	kubefwd_mapping="${grafana.port_map}" ${make} kubefwd.start/${grafana.namespace}/${grafana.pod_name}
	$(call io.log, Connect with: http://admin:prom-operator@grafana:${grafana.host_port}\n)

fwd.grafana.stop: kubefwd.stop/stop/${grafana.namespace}/${grafana.pod_name}

# Runs all the grafana tests, some from inside the cluster, some from outside.

test.grafana: test.grafana.basic test.grafana.api

# Another public/private pair of targets.

# The public one is safe to run from the host, and just calls the private target

# from inside a tool container. Since `.test.grafana.basic` runs in a container,

# it's safe to use kubectl (and lots of other tools) without assuming they are

# available on the host. Also note the usage of `k8s.kubens/..` prerequisite to

# set the kubernetes namespace that's used for the rest of the target body.

test.grafana.basic:; $(call containerized.maybe, kubectl)

.test.grafana.basic: k8s.kubens/monitoring
	kubectl get pods

# Tests the grafana webserver and API, using the default authentication,

# from the host. Note that this requires kubefwd tunnel has already been

# setup using the `fwd.grafana` target, and that the docker-host actually

# has curl! First request grabs cookies for auth and the second uses them.

test.grafana.api:
	$(call io.log, ${bold}Testing Grafana API)
	${io.mktemp} \
	&& curl -sS -c $${tmpf} -X POST ${grafana.url_base}/login \
		-H "Content-Type: application/json" \
		-d '{"user":"admin", "password":"${grafana.password}"}' \
	&& curl -sS -b $${tmpf} ${grafana.url_base}/api/search \
	| ${jq} '.[]|select(.title|contains("Prometheus"))' \
	| ${stream.as.log}

# Opens an interactive shell into the test-pod.

# This requires that `deploy.test_harness` has already run.

cluster.shell: wait k8s.pod.shell/${pod_namespace}/${pod_name}
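The flux.retry/3/... prerequisites in the source above can be thought of as a bounded retry loop around another target. The following shell sketch shows the general pattern only. It is not the compose.mk implementation, and the retry function and the RETRY_DELAY variable are hypothetical names.

```shell
# Hypothetical helper: run a command up to N times, stopping at the first
# success. RETRY_DELAY (seconds between attempts) defaults to 1.
retry() {
    max=$1
    shift
    attempt=1
    while :; do
        # Succeed as soon as the wrapped command does.
        "$@" && return 0
        if [ "$attempt" -ge "$max" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "${RETRY_DELAY:-1}"
    done
}
```

Usage would look like `retry 3 ./demos/cluster-lifecycle.mk deploy.helm`. Retrying is only safe when the wrapped step is idempotent, which is one reason the demo leans on get_or_create style targets.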